Project - Stage 2 - Benchmark - aarchie

**This post presents rewritten benchmark results. The benchmarks I blogged previously were not large enough for measurement and comparison purposes in Stage 3.

To meet the deadline, only the benchmark results from aarchie are posted here, but I will add results from other platforms as well.**

**At the time of benchmarking, multiple users were logged in to the aarchie server. The performance may differ when the server has no other users.**

Files used:

- sample.txt (1GB)

- largeImage.jpg (41.03MB)

To create the large text file:

base64 /dev/urandom | head -c 1024000000 > sample.txt

This benchmark measures the time taken to compress a large text file and a large image file.

**I will not include directory compression, as bzip2 cannot compress a directory by itself; directories are compressed with the help of tar. Because I want to test the performance of bzip2 only, I will compress large files individually.**

What is used for measurements:

- I used the time command and will use the real time as the benchmark value

- I used perf to generate a .data file and converted it to an SVG call graph. Using this graph, I identified hot spots in the program to investigate possibilities for algorithmic optimization.
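As a rough sketch of how the real time alone can be captured in bash: the helper name `real_seconds` below is hypothetical and was not part of the benchmark runs in this post. It relies on bash's `time` keyword and the `TIMEFORMAT` variable, where `%R` means elapsed (real) seconds.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print only the elapsed (real) time, in seconds,
# of the command passed as arguments.
real_seconds() {
    # %R makes bash's time keyword report only the real time
    local TIMEFORMAT=%R
    # The command's own output is discarded; the timing report, which
    # bash writes to stderr, is redirected to stdout so it can be captured
    { time "$@" >/dev/null 2>&1; } 2>&1
}

# Example: capture the real time of one command in a variable
elapsed=$(real_seconds sleep 0)
echo "real: ${elapsed}s"
```

Because the helper throws away the command's output, it only suits commands that write to standard output, such as bzip2 with the -c flag.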

How I conducted benchmarks:

- Using a for loop in the terminal, I ran the following command to execute the compression multiple times:

for((x=1; x < 8; x++)); do time perf record --call-graph=dwarf -o test$x.data ./bzip2 -c sample.txt > sample$x.txt.bz2; done

- The -c flag makes bzip2 write the compressed data to standard output, so the original file is kept

- > sample$x.txt.bz2 redirects that output into the named compressed file

- The loop runs 7 times: the first 2 runs allow the cache to warm up with the file data, and the last 5 are used as benchmark values
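Once the real times are collected, dropping the warm-up runs and averaging the rest can be sketched as below. The function name `mean_after_warmup` is hypothetical, and the numbers in the example are placeholders, not measured results.

```bash
#!/usr/bin/env bash
# Hypothetical helper: average a list of real times (in seconds)
# after skipping the first <warmup_count> values.
# Usage: mean_after_warmup <warmup_count> <t1> <t2> ...
mean_after_warmup() {
    local warmup=$1
    shift
    # Drop the warm-up runs from the argument list
    shift "$warmup" || return 1
    # Nothing left to average
    (( $# > 0 )) || return 1
    printf '%s\n' "$@" | awk '{ sum += $1 } END { printf "%.3f\n", sum / NR }'
}

# Placeholder values only: skip the first 2, average the remaining 5
mean_after_warmup 2 10.0 9.0 4.0 5.0 6.0 7.0 8.0
# prints 6.000
```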

To generate call graph svg files
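The exact command is not reproduced here. One common route from a perf .data file to an SVG call graph, which is an assumption and not necessarily what was used for this post, pipes `perf script` through gprof2dot and Graphviz's `dot`. The helper name `perf_data_to_svg` is hypothetical, and all three tools are assumed to be installed and on the PATH.

```bash
#!/usr/bin/env bash
# Hypothetical helper; assumes perf, gprof2dot and Graphviz (dot) are installed.
# Usage: perf_data_to_svg test1.data test1.svg
perf_data_to_svg() {
    local data_file=$1 svg_file=$2
    # perf script dumps the recorded samples as text,
    # gprof2dot -f perf turns them into a dot call graph,
    # dot -Tsvg renders that graph as an SVG file
    perf script -i "$data_file" | gprof2dot -f perf | dot -Tsvg -o "$svg_file"
}
```

With the file names from the loop above, this could be called once per run, for example `perf_data_to_svg test3.data test3.svg`.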

**I decided to discard the first and last results for sample.txt because of the message "Warning: Processed 931916 events and lost 1 chunks! Check IO/CPU overload!"

Based on this message, it is possible that the last execution did not compress properly. For this reason, I chose the middle 5 results as benchmark values.**
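The warning is printed by perf and refers to lost profiling events, so it does not by itself say whether the compressed output is damaged. A sketch of how that could be checked directly, assuming a bzip2 binary is available: `bzip2 -t` tests the integrity of a compressed file, and decompressing to standard output allows a comparison with the original. The helper name `verify_bz2` and the `BZIP2` variable are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that a .bz2 file is intact and that it
# decompresses back to the original file.
# Usage: verify_bz2 sample.txt sample1.txt.bz2
# Set BZIP2=./bzip2 to check with a locally built binary (assumption).
verify_bz2() {
    local original=$1 compressed=$2
    local bz=${BZIP2:-bzip2}
    # -t tests the integrity of the compressed file without writing output
    "$bz" -t "$compressed" || return 1
    # -d -c decompresses to standard output; cmp -s compares it
    # silently with the original, reading the stream from "-"
    "$bz" -dc "$compressed" | cmp -s - "$original"
}
```

A run whose output passes both checks compressed correctly, even if perf lost some samples while profiling it.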

Results

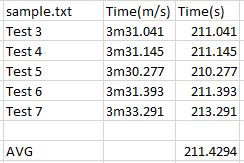

sample.txt

largeImage.jpeg
